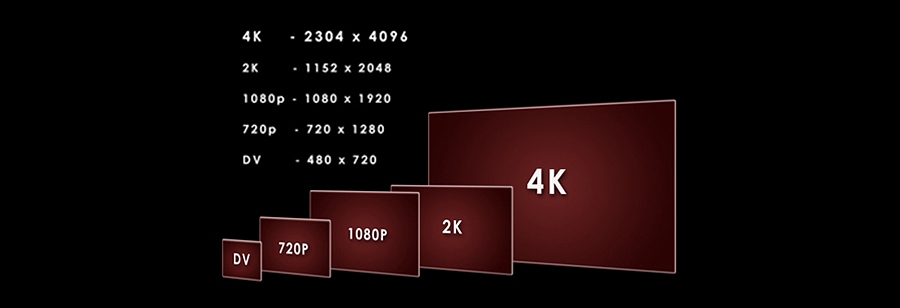

Now that 1080p is pretty well entrenched as the current home theater standard for HDTV and display resolution, the consumer electronics industry is already making moves to push us to new 4k screens. At a resolution of 4096×2304, that’s roughly four-and-a-half times the number of pixels we’re using now. Are you willing to make this leap, or do you question the need for it?
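For anyone who wants to check the pixel math, here is a quick sketch in Python. The function and dictionary names are just illustrative; the resolutions are the ones quoted in this post, including the 3840 x 2160 figure that comes up for e-Shift.

```python
# Pixel counts for the resolutions mentioned in this post.
# Names below are illustrative, not from any standard library.
RESOLUTIONS = {
    "1080p (1920x1080)": (1920, 1080),
    "4k (4096x2304)": (4096, 2304),
    "e-Shift (3840x2160)": (3840, 2160),
}


def pixel_count(width, height):
    """Total number of pixels in a width x height frame."""
    return width * height


def ratio_to_1080p(width, height):
    """How many times more pixels a frame has than 1920x1080."""
    return pixel_count(width, height) / pixel_count(1920, 1080)


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(w, h):,} pixels, "
          f"{ratio_to_1080p(w, h):.2f}x 1080p")
```

The 4096×2304 frame works out to about 4.55 times the pixels of 1080p, while 3840 x 2160 is exactly four times.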

Honestly, I have to question the need for it. The jump from standard definition to high definition (even 720p) was a revolutionary improvement in the quality of watching movies and television at home. The increase from 720p to 1080p may be less apparent on TVs smaller than 30″, but is still clearly beneficial on larger screens. Frankly, for the vast majority of viewers, 1080p is a sweet spot where video images are richly detailed without visible pixel structure, even on large screens. Beyond that, it becomes a case of diminishing returns. Aside from projection users with extremely large screens (at least 100″), the increase in resolution from 1080p to 4k will not be visible to the eye.
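To put rough numbers on the "diminishing returns" argument, here is a back-of-the-envelope sketch. It assumes the common rule of thumb that 20/20 vision resolves detail down to about one arcminute, and it treats the screen as a flat 16:9 panel viewed head-on. Both are simplifying assumptions, and the function names are made up for illustration.

```python
import math

# Assumption: the eye resolves detail down to roughly 1 arcminute.
ONE_ARCMINUTE_RAD = math.radians(1 / 60)


def screen_width_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Width in inches of a screen with the given diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def max_useful_distance_ft(diagonal_in, horizontal_pixels,
                           acuity_rad=ONE_ARCMINUTE_RAD):
    """Distance in feet beyond which a single pixel subtends less than
    the assumed acuity angle, so extra resolution stops being visible."""
    pixel_pitch_in = screen_width_in(diagonal_in) / horizontal_pixels
    return pixel_pitch_in / math.tan(acuity_rad) / 12


for diagonal in (50, 100):
    for label, pixels in (("1080p", 1920), ("4k", 3840)):
        distance = max_useful_distance_ft(diagonal, pixels)
        print(f'{diagonal}" {label}: pixels blend together beyond '
              f"about {distance:.1f} ft")
```

Under these assumptions, sitting farther back than the 1080p figure for your screen size means the extra pixels of 4k land below what the eye can separate. Real-world results will vary with eyesight, content, and the display itself.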

In fact, viewing the content currently available to us from Blu-ray, DVD, Netflix, broadcast TV, etc. on 4k screens will require that those video images be upconverted to the higher resolution, which will introduce the possibility of visible scaling artifacts. I would expect Netflix and standard-definition sources like DVD to fare particularly poorly in this regard. They’d likely look better on the same size screen with less scaling.

Right now, consumers have no native 4k video sources available to watch. While that may change in the future (reportedly, Sony is hard at work on a new 4k format), we’ll still run into a problem of limited content availability. Most movies today that are either shot digitally or post-produced with a Digital Intermediate are permanently locked to 2k resolution or less. (Even ‘Avatar’ was shot with only 1080p cameras!) Very few movies are natively shot at 4k yet. Even among movies originally photographed on 35mm film, the majority have been transferred to video at 2k. Studios would need to rescan most of their old movies to create new 4k masters. Considering the sad state of catalog titles on Blu-ray now, can you really imagine a studio like Universal (notorious for dusting off aging DVD masters for reuse on Blu-ray) going to that trouble and expense?

Don’t get me wrong, I definitely see the benefit of 4k in movie theaters, and as an archival medium as studios convert their old films to digital. However, I see much less need for it in the home.

On the other hand, a brand new video format may bring with it some fringe benefits missing on Blu-ray, such as improved color depth (the so-called “DeepColor”) that would reduce the presence of color banding artifacts. That might be a good thing. Too bad it’s too late to implement that on our current Blu-ray standard now.

I also see great potential in using a 4k screen for passive 3D. A 4k screen can display two full 1080p images simultaneously without the need for active shutter glasses and the problems associated with those, such as flicker and headaches, not to mention the uncomfortable shutter glasses themselves.

Sony has already released one 4k projector to market (at a cost of $25,000!), and JVC has a few more affordable models that use a pseudo-4k format called e-Shift (which has a resolution of 3840 x 2160). Early adopters have been pleased with the results, but these same early adopters are also likely to have exceptionally large screens. More manufacturers are preparing to unleash 4k TVs in the coming months.

Do you see this as a welcome evolution in video technology, or a shameless cash-grab from an industry desperate to get us to buy new televisions all over again? Vote in our poll.

Alex

I need to know that there’s going to be plenty of media available in 4K before I take the plunge.

Tim Tringle

Seriously, I am a complete HD Snob and the fact is that most content these days is still restricted to SD. Half of all material on Netflix streaming is SD and until Comcast and other companies stop with the CAPS we won’t see that changing soon.

Until everything I access is in 1080p and there is a website that streams 4K, I won’t be worrying about switching. If the transition to HD is any gauge, it will take a minimum of 5 to 10 years to get 4K penetration to the same point we are currently at with 1080p.

William Henley

YouTube started streaming 4k about a year ago. That being said, there may be 20 or so videos on there that actually have that resolution.

Cameron Hurtado

I only upgrade my televisions when my current one bursts into flames or breaks in some other dramatic way. I actually just bought a Vizio Passive 3DTV and I couldn’t be happier. So unless this TV decides to commit suicide, I don’t see me upgrading for a looooooong time. Especially since my place isn’t big enough to justify a large enough screen for it to be worth it.

Rcorman

Unfortunately I don’t see 4K catching on for home use. The TV networks/broadcasters aren’t going to dump money into 4K after already upgrading to HD. Plus the movie studios do a bad enough job releasing (or not releasing) their catalog titles in 1080p right now.

Having said that, I would love 4K to catch on just so I could get a reasonably priced 4096×2304 TV that I could use as a computer monitor.

William Henley

I don’t see the point of 4k unless I decide to get a dramatically larger screen, which I may do in about 4-5 years. Even at 80 inches, 1080P looks REALLY good, from what I have seen in the stores that have them on display.

At 4k, I just think you are going to hit a wall of diminishing returns. And some manufacturers are already touting 8k displays as well.

I may consider myself an HD Snob, but there does come a point where you just really are not going to see a difference. I have a Vizio Passive 3D display now. It looks great. But with the constraints of the room and the distance I sit from the TV, I doubt I would notice a higher resolution.

I also agree with you about video looking bad when upconverted. Many people I know, myself included, actually keep a tube TV or two around the house, mainly for watching older SD content on, just because it looks better.

Also, while I invested some in DVD, it is not nearly to the extent that I have invested in Blu-Ray (probably because I started my professional career about the time Blu-Ray came out, so I just didn’t have the funds beforehand to invest in content). I have spent thousands on movies. I have no desire to rebuy everything, unless Vudu offers an HDX to 4K upgrade option.

I DO, however, want to see 4k and 8k displays at theaters, with content produced (not upconverted) for them. If I go to a movie theater, I expect to have a dramatically better experience than I can get in my home. Believe it or not, I still have a local theater that shows film that I go to from time to time, depending on the movie. Do I want better picture, or better sound? (AFAIK, and I know Josh can correct me on this, film is limited to Dolby Digital, Dolby Stereo, Stereo, Mono, and SDDS. DTS is not actually stored on the film, but comes on optical discs that sync up to timestamps on the film.)

So, yeah, no 4k for the home unless I decide to go 80 inch or larger, which won’t be for years, and even then, only if content is available.

I still don’t think it’s too late to upgrade the Blu-Ray specs. Blu-Ray 3.0 is way past due. I want 1080p on all framerates, not just 24, better support for PAL content, and to support 2.35:1 displays. Truthfully, it would be great if AVCHD became part of the Blu-Ray spec as well.

Josh Zyber (Author)

SDDS is dead, and DTS has abandoned the theatrical market. Digital and IMAX theaters today actually use multi-channel uncompressed PCM sound. Dolby processing may be applied on top of that if the movie has a Dolby 7.1 track, but the base format is PCM.

Legacy 35mm theaters continue to use Dolby SR or Dolby Digital audio.

William Henley

Right. So, right now, you have the choice to go see something in 2k Digital with awesome PCM sound, or you can go see something on 35mm film which has better picture quality, but is limited to Dolby Digital.

This is why I make trips across town to see movies in IMAX Film! http://en.wikipedia.org/wiki/IMAX#Soundtrack

You get the advantage of film, and the advantage of PCM sound!

Sadly, this almost doesn’t matter anymore, as way too many films are edited at 2k. I would hope that if they are outputting to IMAX, they are not editing at 2k, but stupider things have happened.

Josh Zyber (Author)

Picture quality on film will depend on the condition of the print and how well the theater treats it. In most cases these days, digital is more consistent and better quality.

Even the best 35mm theatrical print is several generations removed from the source. Digital projection should be the same quality as the DI master.

Re: IMAX, aside from movies like The Dark Knight that have sequences shot on IMAX film, most DMR screenings are upconverted from 2k. In fact, digital IMAX theaters are 2k resolution.

William Henley

Right, but you also have IMAX documentaries. Most are still shot on film. I think Born to be Wild was actually shot with 4k cameras, and Hubble annoyingly had a lot of content shot with 2k, 1080p and SD cameras that looked like crap on the 90 foot domes.

And yes, that is true about digital, and films being several generations removed from the source. I’ve seen some great-looking film, and some horrible-looking film. It’s kinda hit and miss. If you can find a larger theater still using film, and go opening weekend, the film is usually pretty darn pristine. It used to be that the dollar theaters got some really bad condition films, but as so many theaters have gone digital, my dollar theater has actually started getting some pretty darn-good-looking film in the past year or so. There, though, they tend to leave the 3D filters on their digital projectors, so the film auditoriums usually look significantly better than the digital auditoriums.

No, Digital looks pretty darn good. My local Rave uses all Christie projectors, and they look amazing. The problem is, I think they use the same projectors in ALL their auditoriums. The smaller ones look fantastic. The larger ones, and the Extreme, you start to lose brightness and sharpness as the screen size increases. So for larger screens film tends to look better because of the higher sharpness. That is, if the source isn’t 2k.

(BTW, unless I am mistaken, Digital Imax uses Christie 2k projectors as well).

Truthfully, on the big screens, theaters need both brighter projectors and higher-res projectors, and studios need to start producing their masters at 4k or 8k. THIS is how you get people back into the theaters, by offering them an experience superior to what they can get at home. 2K isn’t THAT much higher than 1080p.

Truthfully, in the past couple of years, I haven’t seen that many movies at the IMAX, because, at 2K, there really is not a significant advantage to it over my local theater. And why pay $15 to get into the IMAX if I can get the same experience for $8?

And why pay $8 if I can get the same experience at home?

Hence, theaters need to offer a unique experience, and to difienciate (oh my gosh, I just butchered teh spelling on that) between IMAX and the regular screens. I feel that, with a couple of exceptions, such as Dark Knight, that IMAX is living off of a legacy name without really offering a superior product. Now, when the Dark Knight was showing, I made it to the IMAX.

Josh Zyber (Author)

Yes, digital IMAX theaters use two Christie 2k projectors converged together onto the same screen to increase brightness.

JM

Do home theater projector screens have a fine enough surface texture to reflect 8K without flaws?

Josh Zyber (Author)

Most likely not. 4k maybe, depending on the screen. (Acoustically transparent models will have a harder job of it.)

M. Enois Duarte

It depends on the specific piece of equipment. I already have amazing picture quality at my screen size, which often looks better than I remember in theaters.

Studios need to focus on making proper HD masters with the least amount of compression possible and with as little clean-up enhancements as possible. They should start learning to use the full 50GB for the movie alone and add a second disc for special features.

William Henley

Eh, yeah, but with the codecs used now, that doesn’t mean you are going to end up with a 50 gig file. This depends largely on the content of the film. I’ve had movies that I have compressed with AVC, and it doesn’t matter if I tell it to compress at 12Mbps or 30Mbps, I get almost the exact same filesize output (the difference is usually only a couple of hundred meg). The reason is, in variable bit rate, you have a maximum (and a minimum in some cases) bitrate, and usually a target bitrate. However, if the picture is not complex enough to need all that space, it won’t use it. Hence why you may not see a huge difference in file-sizes.

Movies such as Avatar that had a lot of visual information, however, would result in higher file sizes, as the picture information is more complex.

The majority of the issues you see on Blu-Ray are not a result of over-compression on the Blu-Ray, but rather a crappy encoder or too much tweaking (sharpening, DNR, etc). It’s also possible that, when using multiple filters, some studios are actually compressing after every pass, meaning you are compressing a compressed file. I would assume that you do not have this issue with the major production houses – hopefully the people they hire for this stuff actually know what they are doing.

In any case, just because a movie doesn’t fill a full 50 gig does not mean that you are not getting the highest quality possible from that movie.

William Henley

BTW, on average, 1080p at either 24fps or 30fps (AVCHD), for about a 2 hour video, encoded in AVC, results in a filesize anywhere from 15 gig to 30 gig, depending on content. Nothing I have thrown at AVC has EVER resulted in a filesize larger than 30 gig. I am not saying it’s not possible (once again, Avatar is a perfect example), just saying that just because a movie doesn’t fill 50 gig (or even a whole 25 gig) doesn’t mean that you are not getting the best quality possible.
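The relationship between average bitrate and file size is simple arithmetic, so here is a rough sketch of it. It assumes a constant average video bitrate, ignores audio and container overhead, and uses decimal gigabytes (1 GB = 1,000,000,000 bytes). The function names are just for illustration.

```python
def file_size_gb(avg_bitrate_mbps, duration_min):
    """Approximate video file size in decimal GB for a given average
    bitrate (megabits per second) and running time (minutes)."""
    total_bits = avg_bitrate_mbps * 1_000_000 * duration_min * 60
    return total_bits / 8 / 1_000_000_000


def avg_bitrate_mbps(size_gb, duration_min):
    """Average bitrate in Mbps implied by a file size and running time."""
    total_bits = size_gb * 1_000_000_000 * 8
    return total_bits / (duration_min * 60) / 1_000_000


# A 2 hour movie at the two encoder settings mentioned above.
for rate in (12, 30):
    print(f"{rate} Mbps for 120 min: about {file_size_gb(rate, 120):.1f} GB")

# Average bitrates implied by 15, 30 and 50 GB files of the same length.
for size in (15, 30, 50):
    print(f"{size} GB over 120 min: about {avg_bitrate_mbps(size, 120):.1f} Mbps")
```

Note that this only describes what happens when the encoder actually spends the bitrate it is given. With variable bit rate, as described above, the real average can come in well under the target on simple material, which is why two different settings can produce nearly the same file.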

JM

LG is expecting 4K blu-ray to launch in 2013.

Sony is talking with studios to finalize the specs for 4K blu-ray.

Intel is forecasting a flood of 4K tablets, laptops, and monitors in 2013.

So I’ll probably get a 15″ laptop and an 84″ oled + PS4.

I hope the new standard includes 240 fps.

Only 7 months until CES…

Brian Hoss

I am very comfortable at the 1080p standard, and have been for four years. The pc game ceiling right now is 2560 x 1600, but it really takes a top of the line set-up to get there, or else other rendering effects or physics effects have to be toned down.

But working towards a 4k standard is fine, possibly even smart. Maybe a feature film like the Dark Knight Rises might be poorly suited for that level of detail (I know I absolutely enjoy 24 frame feature films), but nature films and other kinds of documentaries would make sense. Live content in general, like sports and concerts, would also help to familiarize people with the benefits of a higher resolution.

If 4k becomes available in some theaters, like specialty theaters, and content is produced for it, it will trickle down to the high end home theater market. Mainstream adoption seems unlikely, unless the screens become as cheap as paper towels.

With Apple putting 2880 x 1800 displays in their high end Mac Pros, a higher res standard than 1080p may be closer than many people would think.

William Henley

Am I the only person smirking at a resolution that high in a laptop (or am I mistaken and that is actually the desktop Macs?) Seems pretty pointless on a screen that size.

Brian Hoss

I have a laptop from 2008 with what I thought was a beautiful 1920 x 1200 17″ screen. Of course, it has always been impractical, and I actually use a 1366 x 768 13″ laptop that I bought in 2010.

2880 x 1800 MacBook Pros are laptops, and the screens are 15″. Their Nvidia 650M graphics cards won’t be able to run games anywhere close to that max resolution, and of course they arbitrarily do not support Blu-rays.

William Henley

Yeah, I have an 8 core PC. I may not have an nVidia 680 in it, but even at 8 cores, I have issues with doing gaming at 1920×1080 at times. If I had a 680, I am sure I would be able to, but if I had a 680 in only a quad-core laptop, I am sure I would start running into issues again. Now, if I had a 50 inch monitor, that kind of resolution may be useful if I was a heavy-duty content creator (like working in Photoshop or something, or having multiple Adobe Applications open at once). On a 15 inch laptop, text and other stuff would just be too small to be of any use.

My 15 inch laptop runs at 1680×1050 (the normal resolution for laptops this size), and there is a reason why laptops this size run at that resolution. At a distance of 18 to 24 inches, that is about the highest that the normal person can see. I am willing to bet that in a blind test (with both screens properly calibrated – I am sure the Apple will probably have a better display than the cheapy, crappily calibrated displays that some laptops ship with), the average person cannot tell the difference between 1680×1050 and 2880×1800. Not on a screen that size.

I am sure that Apple is also using a glossy glass display, so make sure in the blind test the PC also has one. They do make them.

It’s kinda like the iPhone 4. Truthfully, I do not see the difference between the display on it, my old iPhone 3, and my Samsung Galaxy. As I do not plan to hold it 3 inches from my eye, I don’t see the point of the higher resolution.

Truthfully, this sounds like an excuse to double the price of a piece of hardware to me.

Brian Hoss

In the Apple videos, they push how the screen resolution allows you to edit a 1080p video, while still having a lot of screen resolution to spare. This does seem half useful and all extravagant. The retina display models start at $2199.99, and their glossy screens combined with Apple’s high-res UIs make them pop. An Nvidia 650M (M for mobile) is probably way weaker than whatever desktop card you have.

I will disagree with the phone resolution though. I went from an iPhone 3G to a Samsung Captivate (Galaxy 1) to an iPhone 4S, and whenever I boot up the 3G, I really miss the resolution. On the 4S, I look at the Bonus View with 1/10″ body text and it is perfectly legible.

For me, once I switch to a higher resolution display, it’s hard to switch back. The new MacBook Pro displays just seem like a race car body with a lawnmower engine.

Brian Hoss

The Samsung Captivate (Galaxy)’s display is excellent though, but it tends to eat the battery.

William Henley

Yeah, I could edit 1080p video at this resolution, but I can do that now at 1680×1050. I don’t really see the “more real estate” argument coming in – if you crank up the resolution, you are going to have to crank up the size of your graphics and fonts to make up for it. Running Adobe Premiere or Final Cut in their default settings at this resolution on a 15 inch monitor would make one go blind from your work area being so tiny. And if you increase the size of the icons and fonts and everything else anyways, you kind of defeat the purpose of the higher resolution anyways as you have to start copying pixels or whatever, which will actually end up giving you a blocky look.

I am sorry, but I really just do not see where Apple is going with this. I cannot see a practical application for this resolution on a 15 inch screen.

William Henley

Shoot, working at 1920×1080 on a 21 inch screen, I practically have to sit 6-12 inches away from the screen, and squint. You double that resolution and put it on a 15 inch screen, it’s just stupid.

JM

Does 2048×1536 on the new iPad look worse than 1024×768 on the old?

Brian Hoss

I think the resolution of Apple’s new MacBook Pro display is extravagant, impractical, and marginalized. When the new MacBook Pros come in two years and have a new big feature like a touch screen, these displays may seem even more redundant. I just won’t go so far as to say that the higher resolution is useless, and other manufacturers like HP will probably respond competitively.

JM

Glossy magazines are printed at 600 dpi.

Digital screens are trying to catch up with paper.

William Henley

Jane, that is probably the best argument I have heard yet for this type of resolution.

That being said, when you are working on this kind of stuff, you usually are not working at 100% size, you usually blow stuff up so that you can easily see fine detail. You zoom to 100% size to get an idea of what the finished product looks like.

I also do not see a graphic artist working on a 15 inch laptop. They are going to be sitting in front of an Apple Studio Display or Cinema Display (or whatever Apple is calling them now). Now, if you have a 24″ or larger monitor sitting on your desktop and are doing graphic art, a Retina display (4k or even 8k display) might actually make sense.

I guess what I am getting at is that this resolution on a screen this size seems impractical to me.

But you do have a really good argument, and it’s the best one I have heard yet.

motorheadache

I have a 1080p projector and the picture looks amazing– a very “theater like” image from Blu-Ray. I think a further jump in quality is completely superfluous for home theater. I mean, shit, there’s still plenty of people that don’t care about the difference between DVD and Blu-Ray.

Even if I ended up with a 4K projector in the future just because that was the new standard, I definitely wouldn’t be rebuying almost anything that I had on Blu-Ray in whatever 4K format was coming out.

Aaron Peck

I can’t wait until they reach a format with a picture so detailed my eyes won’t be able to take it causing them to burst from their sockets.

JM

NHK’s Integral 3D will make your balls pop.

William Henley

You know what, I will upgrade my movie collection again when holodecks are invented!

M. Enois Duarte

I don’t know about that. They always seem to malfunction more often than Blu-rays or the players.

http://www.youtube.com/watch?v=68qAw1L-d5E

William Henley

Holoaddiction and falling in love with holo characters are malfunctions? :-p

Truthfully, most of these issues could be avoided if they would pass some type of regulation preventing the crew from using the holodeck during battle or strange spatial anomalies.

August Lehe

This question is a bit premature for me since I will just be entering the 1080 world this winter (with a screen larger than 40 inches). Maybe in a few years, when my Blu Ray collection is fairly ‘complete’ – like 500 titles!

Jason

I sit about 10 feet from a 110″ 16:9 screen with a 1080p Epson 5010 projector. If I had to sit any closer to “appreciate” the added resolution of 4K it would be like watching a tennis match from the judge’s perch.

There has been plenty of discussion as to why 4K will probably never catch on… expense of production, expense of consumer level products, expense of marketing a product to people who still don’t understand aspect ratios or have a 50″ 1080p set 15 ft away from their couch… just to name a few. And I have to agree. The difference between SD and 1080p on a 42″ screen is noticeable, even to novice eyes. But the difference of 4K from 1080p is only appreciable on much larger sets.

4K will come around but I feel it’ll have an existence much like that of LaserDisc. Few will bother with it and it’ll go away due to lack of support.

Unfortunately or fortunately I believe that Blu-ray will be the last of the physical media. Too many studios are trying to advance digital media and cloud services for it not to catch on… eventually. I love my physical media and am an avid collector with a Blu-ray/DVD collection of over 1200 titles, 5000 CDs and 1000s of books. I would love to see physical media last forever but I fear it won’t. And in a world where the general populace is okay with “instant 1080p” downloads on a cloud server there is no room for 4K to survive.

JM

The review of Sony’s 4K projector at projectorreviews.com is a must read.

4K makes 1080p blu-rays look significantly better.

4K solves the problem of 3D softness.

4K footage on a 124″ 2.35:1 screen = breathtaking.

4K includes a DCI mode, for the full color gamut used in theaters.

You can’t just think of 4K as extra pixels. It’s a much deeper spec.

Jonathan Bain

This is ridiculous. It’s impressive, fair enough, but for God’s sake. The entire world got along fine with TVs at 480i (not even P!) for almost 80 years and then suddenly in the space of what? 7 years we’ve jumped to 720p, then 1080i which was a gimmick, then 1080p and now 4k and even 8k?

What…is…the…need.

I would consider buying one MAYBE in 2020 or 2025 but somehow I know I’m going to get forcibly boarded onto the gravy train and will have to get one far before then.

paulb

While I would like the improved color and other aspects, I would jump at good 4k for a larger set and especially for a projector.

Like HDTV and a good scaler did for DVD, 4k and an existing scaler will make BR and HDTV look better (as others have noted).

Also, think about other uses as computers and other devices are hooked up. Currently, reading text/websites etc. on a larger 1080p set isn’t good, as you can see all the aliasing. It’s a similar argument to Apple’s “retinal” (I hate that fake word) screens, which plenty of people say weren’t needed but which, even on the small iPad and Mac screens, make a stunning difference.